TESTING A MULTIMODAL SMART GLASS-BASED SOLUTION FOR ON-THE-GO TEXT EDITING

On-the-go text editing is difficult yet frequently done, and it forces users into a heads-down posture that can be undesirable. EYEditor, a heads-up, smart glass-based solution, displays the text on a see-through peripheral display and allows text editing with voice and manual input.

Role:

Literature Research, Conducting the Pilot Study, Designing the Experiment, Conducting the Experiment and Interviews, Data Analysis and Visualization

Team:

Vinitha Reddy Erusu (Research Intern)

Debjyoti Ghosh (Ph.D. in HCI)

Shengdong Zhao (Research Advisor)

Nuwan Janaka (Ph.D. student in HCI)

Yang Chen (Design Intern)

Duration:

4 months

June 2019 - Sept 2019

Project Details:

Academic HCI Research Project

National University of Singapore Human-Computer Interaction Lab

RESEARCH PROCESS

THE OBJECTIVE

is to understand how the smart glass and the phone compare in handling the trade-offs between text-editing and path-navigation needs.

While the smartphone demands full visual attention and is generally used heads-down, the smart glass allows the user to share their visual bandwidth between the digital screen and the path.

To investigate the trade-offs in different situations, we considered two important factors:

the difficulty of the editing/correction task

the path difficulty

To understand the impact of the smart glass platform relative to the phone, we addressed the following

RESEARCH QUESTIONS

Q1: How does each platform handle the visual/cognitive demands of editing on the go?

Hypotheses: Given users’ prior experience with phone-based text editing, we expect the phone to outperform the glass on simple paths that demand less visual attention. Conversely, on difficult paths, we expect the glass to allow better focus on the path, but with lower correction efficiency. It is unclear, however, whether the glass would offer any added advantage over the phone, and how it would affect users’ editing and path-navigation abilities on more difficult paths and tasks.

Q2: What role does posture play in the usability of each platform on various path types?

Hypotheses: We hypothesize that heads-down editing on the phone would be faster on simple paths, because little visual attention is needed for the path, while EYEditor would be better suited to simple corrections on difficult paths. It is unclear, however, how our system would compare to the phone when making difficult corrections on difficult paths.

Q3: Is our solution viable for future consideration?

Our criterion for viability is that EYEditor should offer additional benefits over using phones, especially on more challenging paths.

WE DESIGNED THE STUDIES

by introducing different levels of the realistic complexity one would experience while texting on the go.

3 PATH-COMPLEXITIES (Simple Path, Obstacle Path, Stair Path)

x

2 TEXT EDITING COMPLEXITIES (Easy, Hard)

x

2 TECHNIQUES (Phone, Glass)

This yields a total of 12 conditions (3 × 2 × 2) for each participant to complete.

Path Complexities

Text Complexities

2 Techniques

Smart Glass based platform

Phone platform (any text editor)

WE TESTED

with 12 participants.

12 participants (6 M, 6 F), adults aged 18-36, with an equal number of native and non-native English speakers to avoid speech-recognition bias.

TO ELIMINATE THE CONFOUNDING VARIABLES

while conducting the experiment, we took the following measures.

TESTING IN INDOOR LIGHTING CONDITIONS:

To ensure maximum text visibility, the studies were conducted in indoor lighting conditions.

RANDOMIZING THE ORDER OF CONDITIONS PRESENTED:

To minimize order effects such as learning and fatigue, the 12 conditions were presented to participants in random order.
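The 3 × 2 × 2 design and the randomized presentation order can be sketched in Python as below. This is a minimal illustration, not the study's actual tooling: the helper names and the per-participant seed are hypothetical, and the sketch assumes an independent shuffle of all 12 conditions for each participant (a counterbalanced scheme such as a Latin square would be an alternative).

```python
import itertools
import random

# Factor levels as listed in the study design.
PATHS = ["Simple Path", "Obstacle Path", "Stair Path"]
TASKS = ["Easy", "Hard"]
TECHNIQUES = ["Phone", "Glass"]


def all_conditions():
    """Return every (path, task, technique) combination: 3 x 2 x 2 = 12."""
    return list(itertools.product(PATHS, TASKS, TECHNIQUES))


def randomized_order(participant_id, seed=2019):
    """Return a shuffled copy of the 12 conditions for one participant.

    Hypothetical helper: the seed only makes each participant's order
    reproducible; it is not part of the original study protocol.
    """
    rng = random.Random(f"{seed}-{participant_id}")
    conditions = all_conditions()
    rng.shuffle(conditions)  # shuffles the list in place
    return conditions


if __name__ == "__main__":
    # Print the first condition each of the 12 participants would see.
    for pid in range(1, 13):
        order = randomized_order(pid)
        print(f"P{pid:02d} starts with:", order[0])
```

Seeding per participant keeps each order repeatable across sessions while still differing between participants.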

TRAINING TO FAMILIARIZE PARTICIPANTS WITH THE SMART GLASS PLATFORM:

To ensure a fair comparison of the two platforms, we trained participants on our proposed platform for a total of 20-25 minutes before they performed the conditions, so that they were familiar with it.

ESTABLISHING THE PARTICIPANTS’ PREFERRED WALKING SPEED:

To account for variation in people’s preferred walking speeds, we measured each participant’s preferred walking speed before they performed the conditions and used it as a baseline for comparing results.
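To make the baseline concrete, here is a minimal Python sketch of how a walking speed can be expressed as a percentage of preferred walking speed (PWS). It assumes speed is computed as distance walked divided by time taken; the function names and example figures are hypothetical, not measurements from this study.

```python
def walking_speed(distance_m, duration_s):
    """Average speed in m/s over a route of known length (hypothetical helper)."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return distance_m / duration_s


def percent_pws(speed, preferred_speed):
    """Express a walking speed as a percentage of preferred walking speed.

    Both speeds must use the same unit. 100 means the participant walked at
    their preferred pace; lower values mean they slowed down while editing.
    """
    if preferred_speed <= 0:
        raise ValueError("preferred_speed must be positive")
    return 100.0 * speed / preferred_speed


if __name__ == "__main__":
    # Hypothetical example: a 20 m route walked in 25 s, with a PWS of 1.25 m/s.
    speed = walking_speed(20.0, 25.0)
    print(f"{percent_pws(speed, 1.25):.1f}% of PWS")
```

For example, a participant with a PWS of 1.25 m/s who walks a 20 m route in 25 s (0.8 m/s) is at 64% of PWS, so a value closer to 100% indicates less disruption to natural walking.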

RESULTING TESTING ENVIRONMENTS

To gather data on participants’ performance in each condition, we carried out the following

PROCEDURES DURING THE EXPERIMENT

OBSERVATION & DATA COLLECTION WHILE PERFORMING THE TASK CONDITIONS:

Observations of the difficulties participants faced while performing the different conditions.

Task Completion Time of the participants.

Errors Corrected by the participants.

Preferred Walking Speed of the participants.

Walking Speed of the participants.

POST-EXPERIMENT QUESTIONNAIRE:

After each technique, participants completed a NASA-TLX questionnaire measuring their perceived (subjective) task load for each condition.
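The study does not state which NASA-TLX scoring variant was used. As a minimal sketch, assuming the unweighted "raw TLX" variant, an overall task-load score is simply the mean of the six subscale ratings on a 0-100 scale (the weighted variant instead derives subscale weights from 15 pairwise comparisons). The dictionary keys below are hypothetical labels.

```python
# The six NASA-TLX subscales (keys are hypothetical labels).
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings):
    """Unweighted (raw) NASA-TLX score: mean of six subscale ratings, each 0-100."""
    missing = set(SUBSCALES) - set(ratings)
    if missing:
        raise ValueError(f"missing subscales: {sorted(missing)}")
    for name in SUBSCALES:
        if not 0 <= ratings[name] <= 100:
            raise ValueError(f"{name} rating must be between 0 and 100")
    return sum(ratings[name] for name in SUBSCALES) / len(SUBSCALES)


if __name__ == "__main__":
    # Hypothetical ratings for one participant in one condition.
    example = dict(zip(SUBSCALES, (10, 20, 30, 40, 50, 60)))
    print(raw_tlx(example))
```

Comparing such per-condition scores between the phone and the glass is one way a statement like "overall task load was lower with the glass" can be grounded.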

After completing all conditions, participants filled out a subjective preference questionnaire indicating which technique they preferred in each condition.

POST-EXPERIMENT INTERVIEW:

We interviewed participants to gain insight into their performance.

THE RESULTS

showed that the smart glass outperformed the smartphone when either the path or the editing task became more difficult, but not when both navigation and editing were difficult.

CORRECTION SPEED IN DIFFERENT CONDITIONS

Correction efficiency with the glass was significantly higher when either the path or the text-editing task was difficult, but showed no significant advantage when the path and the task were both easy or both difficult.

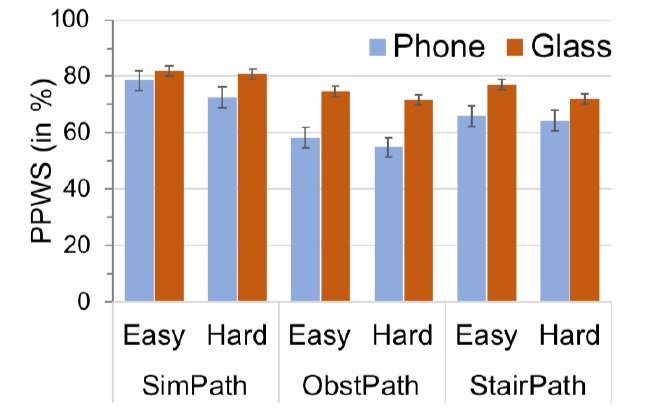

PERCENTAGE OF PREFERRED WALKING SPEED IN DIFFERENT CONDITIONS

Participants’ walking speed with the glass was significantly closer to their preferred walking speed for both Easy and Hard tasks on the Obstacle Path, but not significantly closer on the other paths at either task difficulty.

PREFERRED TECHNIQUE IN DIFFERENT CONDITIONS

About 92% of participants (11 of 12) reported that smart glasses can be a viable alternative to the phone for on-the-go text editing. Participants’ preferred technique for each condition is shown in the graph above.

QUANTITATIVE RESULTS

SUBJECTIVE TASK LOAD

The overall task load with the glass was significantly lower than with the phone.

Participants generally agreed that alternating attention between the text on the glass display and their visual surroundings felt much easier and more seamless than with the phone.

For difficult paths, 25% of participants felt that the glass’s flexibility could instill a false sense of security while actually drawing their attention away from hazards; however, 75% agreed that the glass would be more suitable for navigating difficult paths.

Video logs and interview data revealed that the challenges of the Stair Path were easier to detect heads-down. Because participants corrected text on the phone heads-down, it was “just a matter of glancing sideways” to be sure of their footing on the stairs, whereas with the glass, participants had to shift from a heads-up to a heads-down posture, which caused some discomfort and delay.

Of the participants, 75% said the learning curve for our system felt short and that they could adapt to it easily and quickly. In contrast, 25% continued to prefer the phone and reported feeling more confident with it because they were familiar with it.

QUALITATIVE RESULTS

Overall, the study shows that our smart glass solution, EYEditor, offered certain advantages over the phone and helped participants maintain better path awareness, so EYEditor might be safer to use on the go. However, we found a cognitive bottleneck when both editing and navigation were challenging: in those conditions the advantages of EYEditor became less salient, especially while walking downstairs.

CONCLUSION